MinigameCore Framework

One of the primary goals for Super Bionic Bash is to support an ever-expanding catalog of minigames.

To avoid reinventing the wheel with each new addition, I developed MinigameCore, a custom UE5 plugin that provides

a robust, extensible foundation for minigame development. It includes a suite of tools and systems designed to streamline creation, reduce repetitive work,

and provide a modular, scalable structure. With this plugin, new minigames can be rapidly prototyped and integrated without having to build common functionality from scratch.

MinigameCore is written almost entirely in C++ for speed, efficiency, and access to deeper engine functionality.

However, the entire framework is fully Blueprint-friendly,

making it accessible and intuitive for both programmers and designers to work with.

Minigames can be made entirely within Blueprints by leveraging the provided features in MinigameCore.

For flexibility, minigames can also be written fully or partially in C++.

One of the main strengths of MinigameCore is its modular design. As a plugin, it can be dropped into new, standalone

Unreal projects with minimal setup. This gives minigame creators the freedom to prototype in lightweight, isolated environments,

without the overhead of the full Super Bionic Bash project. Once ready, MinigameCore-powered minigames can be easily migrated back into the main game.

MinigameCore provides many out-of-the-box systems essential for every Super Bionic Bash minigame, including:

- Practice Mode: Lets players rehearse with looping gameplay and an instructional overlay. Ends when all players ready up.

- Player Spawning & Team Management: Built-in support for spawning players in different game modes (Free-for-All, 2v2, or 3v1) with automatic team assignment.

- Scorekeeping: Provides a modular point tracking system that calculates standings at the end of the round. Can be extended for custom scoring logic.

- Minigame UI Widgets: Handles spawning and lifecycle management of UI widgets, so designers only need to focus on the UI. No boilerplate setup required.

- Camera & Splitscreen Support: Supports flexible camera setups for both split-per-player and split-per-team views. Each screen segment gets its own minigame UI copy.

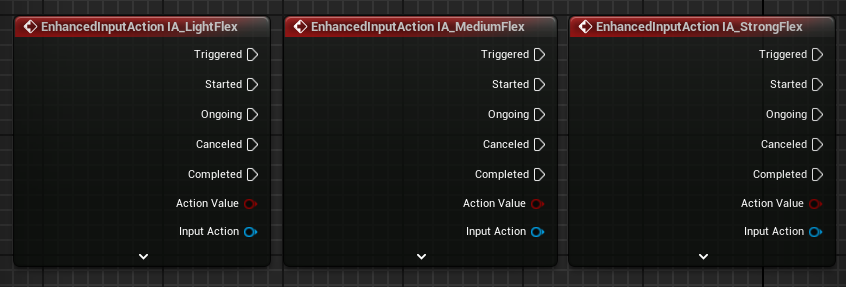

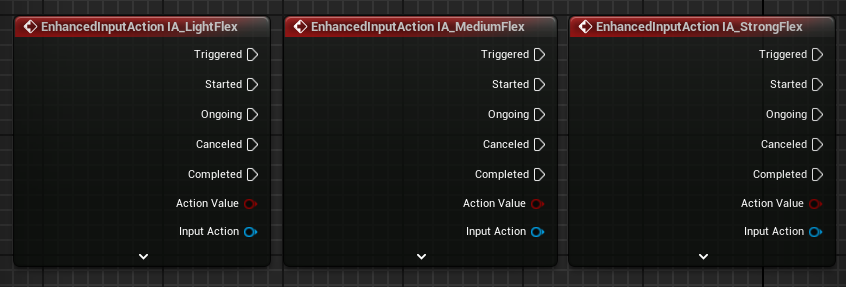

- Abstracted Custom Input: Exposes flex and motion sensor data as Blueprint-friendly input mappings using Unreal’s Enhanced Input system and custom actor components.

- Board Integration: Handles automatic reporting of minigame results to the main board game. Transitions back to the board are fully automated.

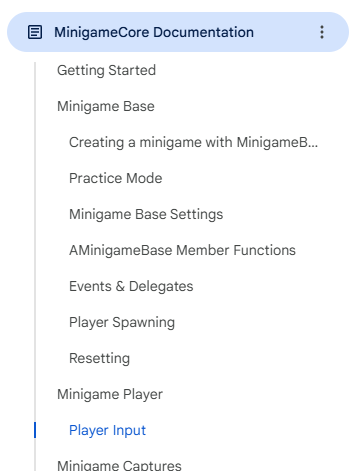
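To illustrate the Scorekeeping item in the list above, here is a minimal sketch of computing end-of-round standings in plain C++. It is not MinigameCore's actual API: `PlayerScore` and `ComputeStandings` are hypothetical names, and the tie rule (tied scores share a placement) is an assumption.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical record: one entry per player (or team) in a round.
struct PlayerScore {
    int playerId;
    int points;
    int placement; // filled in by ComputeStandings (1 = first place)
};

// Sorts by points (highest first) and assigns placements.
// Tied scores share a placement and the next placement is skipped
// (for example 1, 2, 2, 4). This is one common convention, assumed here.
std::vector<PlayerScore> ComputeStandings(std::vector<PlayerScore> scores) {
    std::stable_sort(scores.begin(), scores.end(),
                     [](const PlayerScore& a, const PlayerScore& b) {
                         return a.points > b.points;
                     });
    for (std::size_t i = 0; i < scores.size(); ++i) {
        const bool tied = i > 0 && scores[i].points == scores[i - 1].points;
        scores[i].placement = tied ? scores[i - 1].placement
                                   : static_cast<int>(i) + 1;
    }
    return scores;
}
```

Custom scoring logic could replace the comparison lambda, for instance to rank by lowest time instead of highest points.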

MinigameCore also includes full technical documentation that covers all available features, usage examples, and intended development workflows.

This ensures that both programmers and designers can quickly make the most of the system.

One example of a minigame built using MinigameCore is a cannon shooting game. Players use motion controls to aim their cannon and flex to fire.

Harder flexes launch the cannon's projectile farther, so a shot can overshoot or undershoot its target. Hitting it requires combining precise flexing with careful aim.

International Game Jam

As part of my work with Super Bionic Bash, I had the opportunity to help organize and participate in an international game jam in collaboration with

the University of Skövde in Sweden. The jam brought together 20 students from the university, forming four development teams.

This event was funded by a grant from the U.S. Department of State, highlighting its international and educational significance.

As this jam was primarily intended to generate new minigames for Super Bionic Bash, the MinigameCore system I developed played a central role in the event's

success. I designed MinigameCore with accessibility in mind and worked closely with novice developers

before the jam to ensure the system was intuitive and easy to adopt, even for those with limited Unreal Engine experience.

During the jam, I provided MinigameCore tech support, assisted teams with general Unreal issues, and gathered feedback on my system. The event not only

produced minigames to be used in Bash but also revealed valuable insights for future improvements on MinigameCore, allowing future development to be faster, easier,

and more creative. It also validated some design choices I made with MinigameCore, such as prioritizing Blueprint support with the expectation that minigame creators will

not have working C++ knowledge.

EMG/Bluetooth

As part of my work on the games, I helped develop the Bluetooth backend for a custom controller that uses electromyography (EMG) to detect

the player's muscle flexion. The controller broadcasts these signals via Bluetooth to the game, where my system manages multiple connections, handles reconnections,

translates raw flex values into usable in-game input, and automatically assigns controllers to players. The backend is also designed to support platform-specific

Bluetooth APIs, allowing flexible deployment and seamless integration across different devices.
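The automatic controller assignment and reconnection handling described above can be sketched as a small slot table. This is an illustration only, not the real backend: `ControllerAssigner`, its methods, the four-player limit, and the use of a device address string as the identity key are all assumptions.

```cpp
#include <array>
#include <string>

// Hypothetical slot table mapping Bluetooth device addresses to player slots.
class ControllerAssigner {
public:
    static constexpr int kMaxPlayers = 4; // assumed player count

    // Called when a device connects. Returns its player slot, or -1 if full.
    int OnConnected(const std::string& address) {
        // A known address is a reconnection: hand back the same slot.
        for (int i = 0; i < kMaxPlayers; ++i) {
            if (slots_[i].address == address) {
                slots_[i].connected = true;
                return i;
            }
        }
        // Otherwise claim the first slot that no device has used yet.
        for (int i = 0; i < kMaxPlayers; ++i) {
            if (slots_[i].address.empty()) {
                slots_[i].address = address;
                slots_[i].connected = true;
                return i;
            }
        }
        return -1;
    }

    // Called when a device drops. The slot stays reserved for reconnection.
    void OnDisconnected(const std::string& address) {
        for (auto& slot : slots_) {
            if (slot.address == address) slot.connected = false;
        }
    }

    bool IsConnected(int player) const { return slots_[player].connected; }

private:
    struct Slot {
        std::string address;    // empty means the slot is unclaimed
        bool connected = false;
    };
    std::array<Slot, kMaxPlayers> slots_{};
};
```

Keeping a dropped controller's slot reserved means a player who reconnects mid-game resumes as the same player rather than being assigned a new one.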

I also designed and implemented several algorithms to improve the usability of EMG input for gameplay.

The first handles calibration, ensuring the player is able to reach the full range of values from the EMG controller.

The second processes real-time EMG signals to detect flex patterns and categorize them into discrete intensity levels (light, medium, or hard flex).

This allows minigames to react to threshold-based inputs rather than noisy raw values. It also mirrors the behavior of EMG-powered prosthetic arms, which use

a threshold-based approach as well.
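As a simplified illustration of the two steps above, the sketch below normalizes a raw reading against a per-player calibration range and then buckets it into intensity levels. The names (`FlexCalibration`, `NormalizeFlex`, `ClassifyFlex`) and the threshold values are assumptions, and the sketch omits the smoothing and pattern detection that a real-time pipeline would need.

```cpp
#include <algorithm>

// Hypothetical calibration result: raw readings observed while the player
// rests and while they flex as hard as is comfortable.
struct FlexCalibration {
    float restRaw;
    float maxRaw;
};

enum class FlexLevel { None, Light, Medium, Hard };

// Maps a raw reading into [0, 1] relative to the player's own range,
// so every player can reach the full range of values.
float NormalizeFlex(float raw, const FlexCalibration& cal) {
    const float range = cal.maxRaw - cal.restRaw;
    if (range <= 0.0f) return 0.0f; // invalid calibration: report no flex
    const float t = (raw - cal.restRaw) / range;
    return std::max(0.0f, std::min(1.0f, t));
}

// Buckets a normalized value using fixed thresholds (values are assumed).
FlexLevel ClassifyFlex(float normalized) {
    if (normalized >= 0.75f) return FlexLevel::Hard;
    if (normalized >= 0.45f) return FlexLevel::Medium;
    if (normalized >= 0.15f) return FlexLevel::Light;
    return FlexLevel::None;
}
```

A minigame would then branch on `FlexLevel` instead of comparing against raw sensor values.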

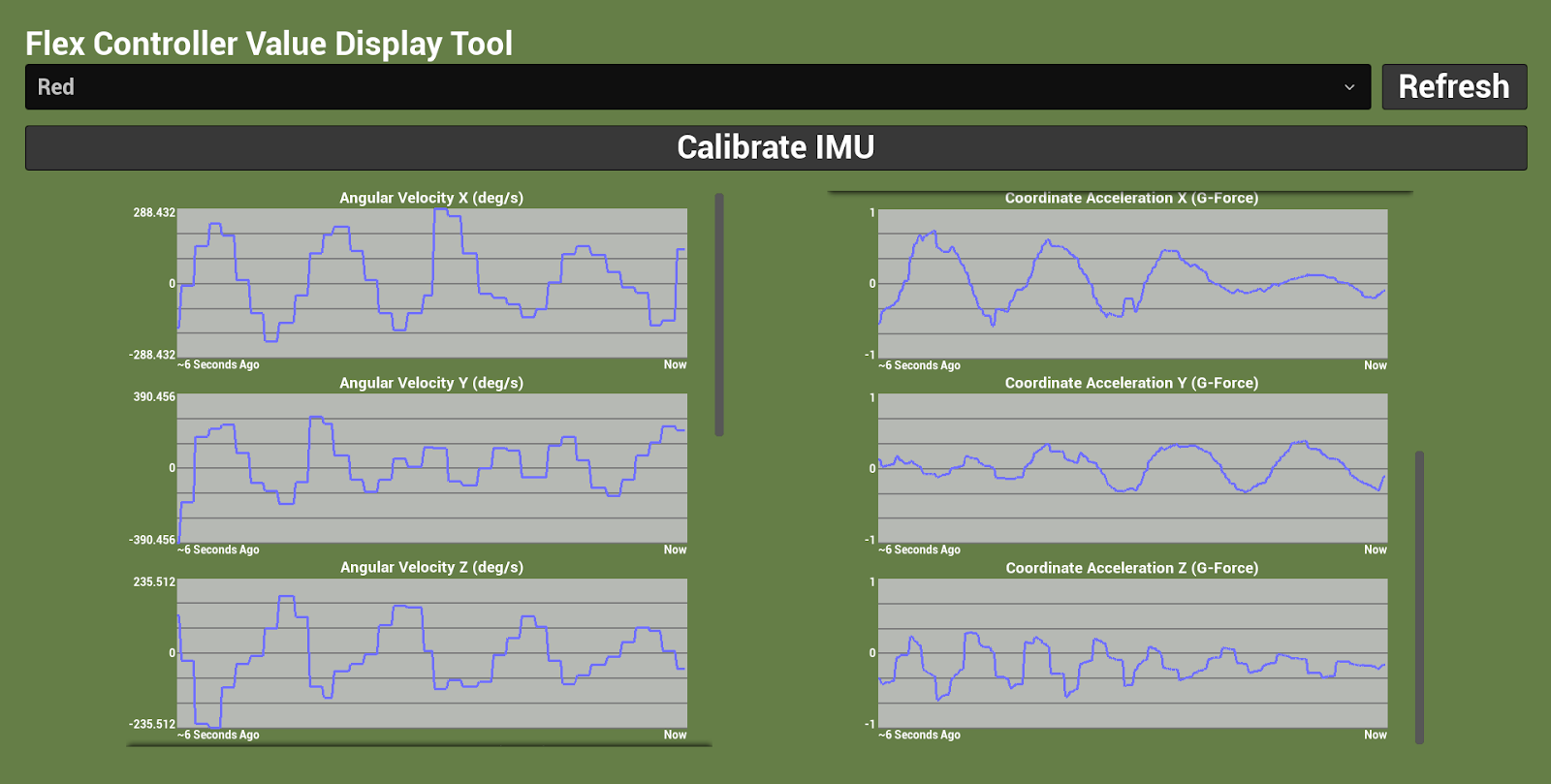

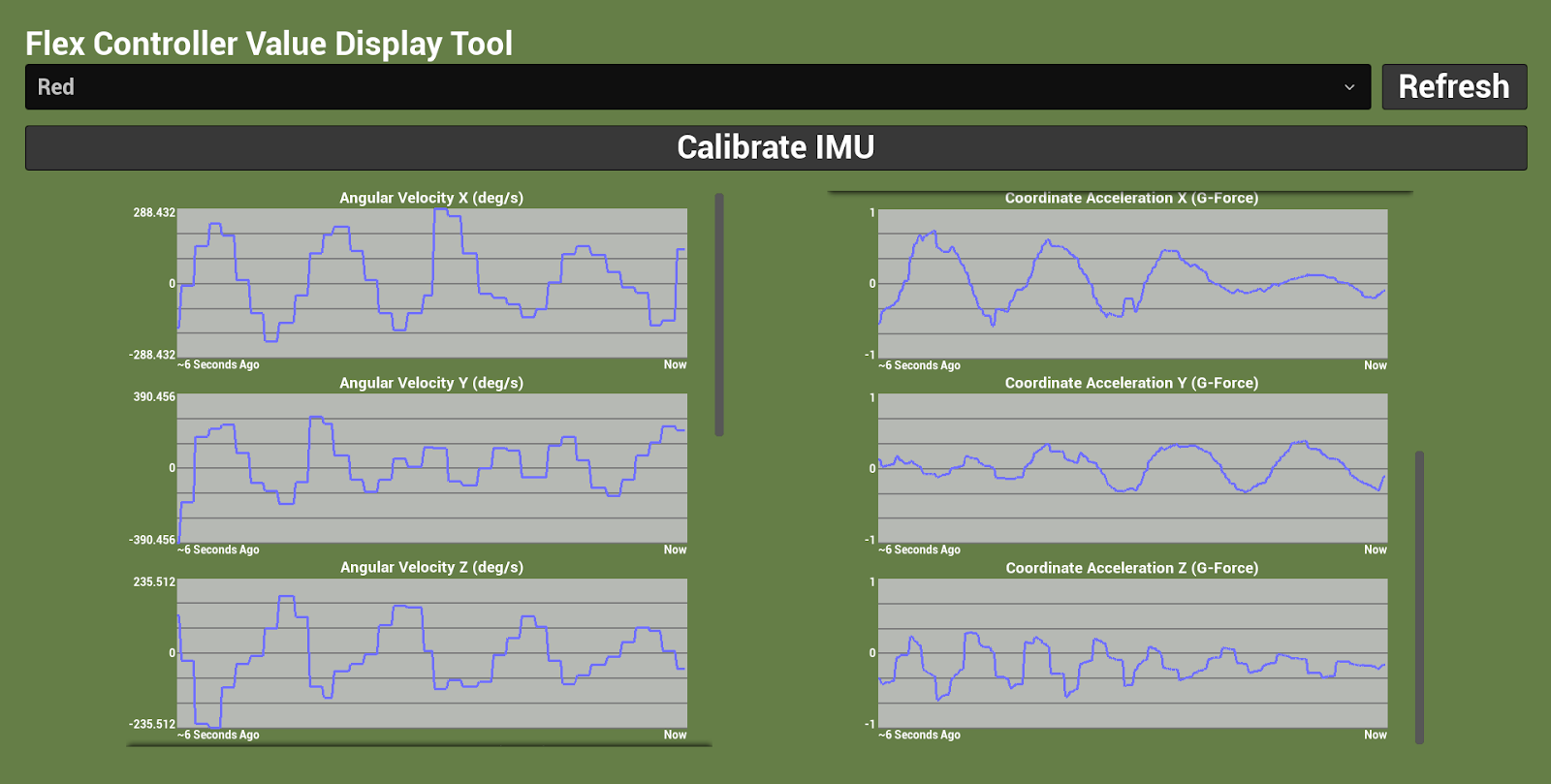

I also developed editor tools to support testing, debugging, and integration of the EMG controllers within Unreal Engine.

One example is a flex value graph, which plots recent EMG signal data over time.

This tool helps developers observe trends, identify issues, and evaluate calibration quality in real time.
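A graph of recent samples needs a bounded history to draw from. The sketch below shows one way to keep it, using a fixed-capacity ring buffer. `SampleHistory` is a hypothetical name, and the actual editor tool may store its data differently.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical fixed-capacity history of recent samples for plotting.
class SampleHistory {
public:
    explicit SampleHistory(std::size_t capacity)
        : buffer_(capacity), head_(0), count_(0) {}

    // Stores a new sample, overwriting the oldest one once the buffer is full.
    void Push(float value) {
        if (buffer_.empty()) return;
        buffer_[head_] = value;
        head_ = (head_ + 1) % buffer_.size();
        if (count_ < buffer_.size()) ++count_;
    }

    // Returns samples oldest-first, ready to be drawn left to right.
    std::vector<float> Snapshot() const {
        std::vector<float> out;
        if (count_ == 0) return out;
        out.reserve(count_);
        const std::size_t start =
            (head_ + buffer_.size() - count_) % buffer_.size();
        for (std::size_t i = 0; i < count_; ++i) {
            out.push_back(buffer_[(start + i) % buffer_.size()]);
        }
        return out;
    }

private:
    std::vector<float> buffer_;
    std::size_t head_;  // index where the next sample will be written
    std::size_t count_; // number of valid samples stored
};
```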

Motion Tracking

Along with an EMG sensor, the custom game controllers used at Limbitless Solutions also include an inertial measurement unit (IMU),

combining a gyroscope and accelerometer. At the time, no prior game at the organization had used the hardware despite it being a part of the controller,

meaning there was no prior implementation or documentation to reference. I was tasked with building this system from the ground up.

With no prior experience working with motion sensors, it seemed daunting to translate raw signal data into usable motion data. The core challenge

of motion tracking was computing device rotation from gyroscope and accelerometer signals.

Early Attempts

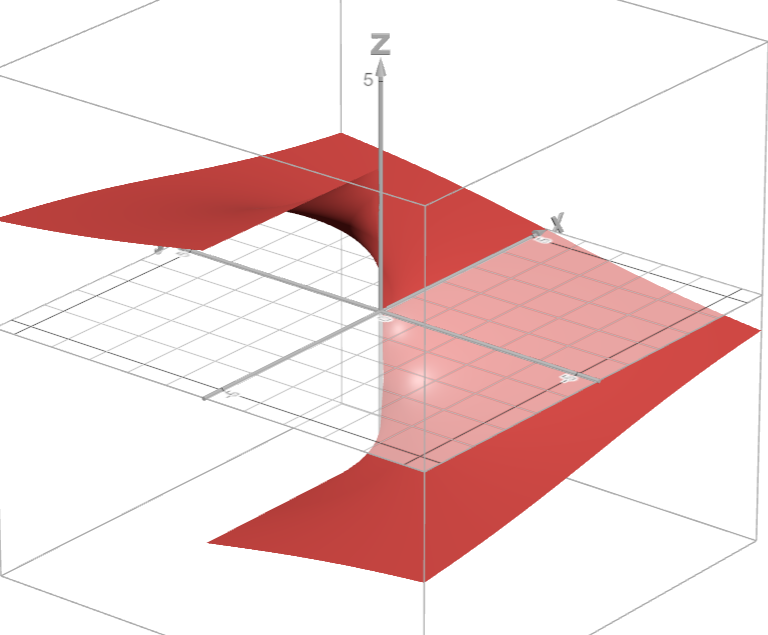

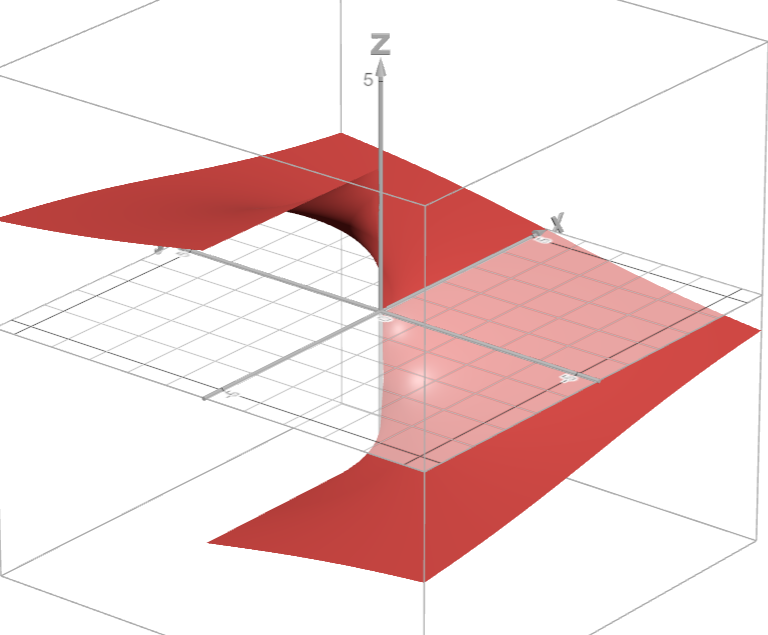

Drawing from my background in mathematics, I initially tried to take a trigonometric approach to determining orientation. Since an accelerometer should

measure 1 g at rest, I attempted to calculate the angle of the device's tilt by applying atan2 to pairs of axes. This method worked fairly well when the device

was still, or when it was moving within the same "octant" of rotation. However, the approach broke down near the axes due to singularities and instabilities in

the angle calculations. The accompanying arctangent graph highlights the issue, showing a discontinuity in the function along the negative X-axis.
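The wraparound is easy to reproduce. The sketch below assumes tilt is estimated from two accelerometer axes while the device is at rest. `TiltFromAccel` is a hypothetical name, and which axes are paired depends on how the sensor is mounted.

```cpp
#include <cmath>

// Tilt angle in radians from two accelerometer components.
// Assumes the device is at rest, so the reading is gravity only.
double TiltFromAccel(double ay, double az) {
    return std::atan2(ay, az);
}

// Difference between two tilt readings taken just either side of the
// negative axis. The physical change is tiny, but the computed angle
// jumps by nearly a full turn (about 2*pi radians).
double JumpAcrossNegativeAxis() {
    const double justAbove = TiltFromAccel(0.001, -1.0);  // close to +pi
    const double justBelow = TiltFromAccel(-0.001, -1.0); // close to -pi
    return justAbove - justBelow;
}
```

Any logic that smooths or differentiates this angle sees a near-instant full rotation at that boundary.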

Research - Quaternions and Physics

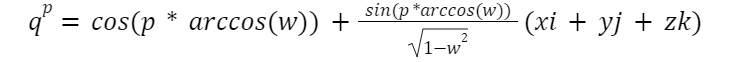

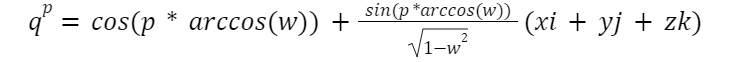

To overcome the limitations of basic trigonometry, I researched more advanced methods of representing orientation. I soon encountered quaternions, a concept

I had heard of in the past but found intimidating. After deep study, I developed a strong understanding and intuition for quaternions as tools for representing

3D rotation. Quaternions avoid common pitfalls with other rotation representations, like the aforementioned singularities or gimbal lock. I mastered key quaternion

concepts, such as how they encode axis-angle rotations, how multiplication and conjugation work, and why the order of rotation composition matters.

Using this foundation, I was able to derive and implement mathematical formulas which proved critical to the motion tracking system.
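The quaternion concepts listed above fit in a few short functions. This is a generic textbook sketch using a right-handed convention and the Hamilton product, not the project's code. The type and function names are illustrative.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };
struct Quat { double w, x, y, z; };

// Unit quaternion for a rotation of `angle` radians about a unit-length axis.
Quat FromAxisAngle(const Vec3& axis, double angle) {
    const double s = std::sin(angle * 0.5);
    return {std::cos(angle * 0.5), axis.x * s, axis.y * s, axis.z * s};
}

// Hamilton product. With Rotate below, Multiply(a, b) applies b first, then a.
Quat Multiply(const Quat& a, const Quat& b) {
    return {
        a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
        a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
        a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
        a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
    };
}

// For a unit quaternion, the conjugate is the inverse rotation.
Quat Conjugate(const Quat& q) { return {q.w, -q.x, -q.y, -q.z}; }

// Rotates v by unit quaternion q: v' = q * (0, v) * conj(q).
Vec3 Rotate(const Quat& q, const Vec3& v) {
    const Quat p =
        Multiply(Multiply(q, Quat{0.0, v.x, v.y, v.z}), Conjugate(q));
    return {p.x, p.y, p.z};
}
```

Composition order matters: rotating the X unit vector 90 degrees about Z and then 90 degrees about X gives the Z unit vector, while applying the same two rotations in the opposite order gives the Y unit vector.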

I also furthered my understanding of the physics of IMUs. For instance, accelerometers measure proper acceleration.

When at rest relative to Earth, an accelerometer will detect the normal force pushing upward to keep it stationary. This finding was surprising to me at first,

but checked out with my observations.

I also explored gyroscope behavior and its tendency to drift over time. To address this, I studied sensor fusion

techniques, which combine accelerometer and gyroscope data to improve accuracy over long periods of time.

Implementation

With my foundation set, I started developing the full data pipeline to enable motion controls. This began by processing raw IMU data, first by decoding it

from a stream of bytes into vectors and then by scaling it into proper physical units. I then transformed the vectors from the IMU's coordinate system into

Unreal Engine 5's coordinate system, which had opposite handedness.
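A rough sketch of this first stage follows. The packet layout (three signed 16-bit little-endian values), the scale factor passed in as `unitsPerCount`, and the choice of negating Y to change handedness are all assumptions for illustration. The real controller's format and axis mapping are not described here.

```cpp
#include <cstdint>

struct Vec3 { float x, y, z; };

// Decodes one signed 16-bit little-endian value from two bytes.
std::int16_t ReadInt16LE(const std::uint8_t* bytes) {
    const std::uint16_t raw =
        static_cast<std::uint16_t>(bytes[0] | (bytes[1] << 8));
    return static_cast<std::int16_t>(raw);
}

// Decodes a 6-byte sample (x, y, z) and scales raw counts to physical units.
// unitsPerCount is the hypothetical sensor resolution, e.g. g per count.
Vec3 DecodeSample(const std::uint8_t* bytes, float unitsPerCount) {
    return {ReadInt16LE(bytes + 0) * unitsPerCount,
            ReadInt16LE(bytes + 2) * unitsPerCount,
            ReadInt16LE(bytes + 4) * unitsPerCount};
}

// Converts a right-handed sensor vector to a left-handed frame by mirroring
// one axis. Which axis to flip (and any axis swaps) depends on how the sensor
// is mounted, so negating Y is an assumption.
Vec3 ToLeftHanded(const Vec3& v) { return {v.x, -v.y, v.z}; }
```

Angular rates are axial vectors and generally need different sign handling under a mirror, so this conversion should not be assumed to apply unchanged to gyroscope data.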

Using integration, I leveraged gyroscope data to estimate angular displacement over the short intervals between packets being sent. As mentioned before,

gyroscope data used this way often drifts over long periods of time, causing the orientation to diverge from reality. Accelerometer data can help by

providing a reference for the direction of gravity, but it too becomes unreliable during rapid movement or external accelerations.
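The gyroscope integration step can be sketched as follows, assuming the rates arrive in radians per second in the device's own frame and that orientation is stored as a unit quaternion. `IntegrateGyro` is a hypothetical name, and the project's exact formulas are not given here.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };
struct Quat { double w, x, y, z; };

// Hamilton product of two quaternions.
Quat Multiply(const Quat& a, const Quat& b) {
    return {
        a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
        a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
        a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
        a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
    };
}

// Advances orientation q by body-frame angular velocity omega (rad/s)
// over dt seconds, treating the rate as constant within the interval.
Quat IntegrateGyro(const Quat& q, const Vec3& omega, double dt) {
    const double rate = std::sqrt(omega.x * omega.x + omega.y * omega.y +
                                  omega.z * omega.z);
    const double angle = rate * dt;
    if (angle < 1e-12) return q; // no measurable rotation this step
    const double s = std::sin(angle * 0.5) / rate;
    const Quat delta{std::cos(angle * 0.5),
                     omega.x * s, omega.y * s, omega.z * s};
    // Body-frame increments compose on the right.
    const Quat r = Multiply(q, delta);
    // Renormalize so rounding error does not accumulate in the length.
    const double n = std::sqrt(r.w * r.w + r.x * r.x + r.y * r.y + r.z * r.z);
    return {r.w / n, r.x / n, r.y / n, r.z / n};
}
```

Any constant bias in `omega` is integrated along with the real motion, which is where the long-term drift comes from.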

To solve this, I combined gyroscope and accelerometer inputs, prioritizing gyroscope data during fast motions and relying on accelerometer data to correct

long-term drift. This results in stable and accurate orientation tracking.
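One common way to blend the two sensors like this is a complementary filter. The single-axis sketch below shows the idea. It is not necessarily the fusion algorithm used in the project, and `TiltFilter` and the `alpha` weight are assumptions.

```cpp
#include <cmath>

// One-axis complementary filter. An alpha close to 1 trusts the gyroscope
// for short-term motion. The remaining (1 - alpha) slowly pulls the estimate
// toward the accelerometer's gravity reference, cancelling long-term drift.
struct TiltFilter {
    double angle; // current estimate, radians
    double alpha; // assumed tuning value, e.g. 0.98

    void Update(double gyroRate, double accelY, double accelZ, double dt) {
        const double gyroAngle = angle + gyroRate * dt;       // integrate gyro
        const double accelAngle = std::atan2(accelY, accelZ); // gravity tilt
        angle = alpha * gyroAngle + (1.0 - alpha) * accelAngle;
    }
};
```

With a stationary device and a small constant gyroscope bias, the estimate settles at a small bounded offset instead of growing without limit as pure integration would.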

With an established orientation, I rotate the accelerometer vector to isolate and subtract the gravity component, which estimates coordinate acceleration.

This acceleration is more intuitive as it is caused by forces other than gravity, such as the player's own movement.

This filtered data is far more useful for gameplay logic than raw proper acceleration.
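The gravity subtraction can be sketched as below. The sketch assumes the orientation quaternion maps device-frame vectors into a world frame whose +Z axis points up, and that acceleration is expressed in units of g, so a device at rest reads +1 along world Z. The names are illustrative.

```cpp
struct Vec3 { double x, y, z; };
struct Quat { double w, x, y, z; };

Vec3 Cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

// Rotates v by unit quaternion q using the expanded form
// v' = v + 2w(u x v) + 2u x (u x v), where u is q's vector part.
Vec3 Rotate(const Quat& q, const Vec3& v) {
    const Vec3 u{q.x, q.y, q.z};
    const Vec3 t = Cross(u, v);
    const Vec3 tt = Cross(u, t);
    return {v.x + 2.0 * (q.w * t.x + tt.x),
            v.y + 2.0 * (q.w * t.y + tt.y),
            v.z + 2.0 * (q.w * t.z + tt.z)};
}

// Estimates coordinate acceleration in the world frame, in units of g.
// The accelerometer reports proper acceleration, so at rest it reads the
// upward 1 g reaction. Subtracting that leaves acceleration from motion.
Vec3 RemoveGravity(const Quat& deviceToWorld, const Vec3& accelDevice) {
    const Vec3 world = Rotate(deviceToWorld, accelDevice);
    return {world.x, world.y, world.z - 1.0};
}
```

The result is only as good as the orientation estimate: a small tilt error leaks a fraction of gravity into the horizontal axes.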

Limitations

In evaluating the effectiveness of the system, I identified a key limitation: a slow but inevitable drift in orientation around the vertical axis.

This is because an accelerometer's gravity measurement alone cannot determine the device's bearing, or the direction it is facing. Accurately measuring

bearing requires additional sensors that the controller does not have, such as a magnetometer (a digital compass).

Other commercially successful motion-controlled games that do not have compass hardware, such as those on the Nintendo Switch, address this issue

by periodically resetting the device's bearing during gameplay. This is often done very subtly, maintaining a smooth player experience. As such,

clever game design can overcome this technical limitation.
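A bearing reset of this kind can be as simple as storing the current yaw as a new zero point. The sketch below is a generic illustration with hypothetical names (`WrapAngle`, `BearingReference`). It does not describe how any particular game implements it.

```cpp
#include <cmath>

// Wraps an angle in radians into the range (-pi, pi].
double WrapAngle(double a) {
    const double kPi = 3.14159265358979323846;
    a = std::fmod(a + kPi, 2.0 * kPi);
    if (a <= 0.0) a += 2.0 * kPi;
    return a - kPi;
}

// Tracks a yaw offset so gameplay sees bearing relative to the last reset.
struct BearingReference {
    double yawOffset = 0.0;

    // Call at a natural moment (round start, aiming prompt) to declare
    // the direction the device currently faces as "forward".
    void Recenter(double currentYaw) { yawOffset = currentYaw; }

    double Relative(double currentYaw) const {
        return WrapAngle(currentYaw - yawOffset);
    }
};
```

Calling `Recenter` whenever the design guarantees the player is facing forward discards whatever drift has accumulated since the last reset.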

Editor Tools

As with the flex value graph, I developed graphing tools to visualize all motion sensor data streams over time.

These graphs proved essential for efficiently testing, debugging, and fine-tuning the motion sensor systems.

Team Collaboration

One of my favorite parts about working on Super Bionic Bash has been the opportunity to collaborate with such a talented team spanning many different skills and disciplines.

I've worked closely with programmers to co-design core systems, like the Bluetooth backend, through problem-solving, collaborative debugging, and architectural planning.

I also regularly support artists and designers by helping them navigate technical aspects of Unreal, ensuring their work is smoothly integrated into the game.

Beyond day-to-day collaboration, I also regularly attended team meetings and frequently presented my work to other teams within Limbitless Solutions.